Anticipating Rare Events of Terrorism and WMD

Anticipating Rare Events: Can Acts of Terror, Use of Weapons of Mass Destruction or Other High Profile Acts Be Anticipated? A Scientific Perspective on Problems, Pitfalls and Prospective Solutions.

Author | Editor: Chesser, N. (DTI).

This white paper covers topics related to the field of anticipating/forecasting specific categories of “rare events” such as acts of terror, use of a weapon of mass destruction, or other high profile attacks. It is primarily meant for the operational community in DoD, DHS, and other USG agencies. It addresses three interrelated facets of the problem set:

- How do various disciplines treat the forecasting of rare events?

- Based on current research in various disciplines, what are fundamental limitations and common pitfalls in anticipating/forecasting rare events?

- And lastly, which strategies are the best candidates to provide remedies (examples from various disciplines are presented)?

This is obviously an important topic and before outlining in some detail the overall flow of the various contributions, some top-level observations and common themes are in order:

- We are NOT dealing here with physical phenomena. Rather these “rare events” that come about are due to human volition. That being the case, one should not expect “point predictions” but rather something more akin to “anticipating/forecasting” a range of possible futures. Furthermore, the difficulties are compounded because these forecasts, to be relevant, have to be done on a global scale and chronologically as far in advance of the rare event occurrence as possible. Therefore, caution is in order when dealing with these phenomena and the reader should approach this topic with a critical disposition and eyes wide open. Hubris is NOT a recommended frame of mind here!

- The reader will be disappointed if she/he expects a linear menu-driven approach to tackle these problems. Brute force approaches are NOT feasible. Or stated differently, the problem set is NOT amenable to a “blueprint” driven reductionist approach! The approach taken here is heavily tilted towards a dynamic methodological pluralism commensurate with the magnitude and scale of the problem set. Emphasis is placed on judiciously incorporating uncertainties and human foibles and attacking the problem set with approaches from various disciplines. These involve analytical, quantitative, and computational models primarily from the social sciences.

- On the other hand, the reader who is disposed to eclectic approaches to this very critical problem set will NOT be disappointed. Inductive, deductive, and abductive approaches will be discussed alongside themes from gaming theories. Key to all this is a mix of creative intuition and the age-old scientific method. The concepts from the fields of complex adaptive systems, emergence, and co-evolution all contribute to a sustained strategy. The process of objective multi-disciplinary inquiry is at the heart of any success in this challenging domain.

- The vast majority of the data for such assessments come from open sources. The reader will encounter approaches that take advantage of such data. Equally important is development of an appreciation of the “cultural other” within their own “context” and “discourse”. This is critically important. On the other hand, this massive amount of data (the good, the bad, and the ugly!) brings with it its own share of problems that will need to be dealt with. There is NO free lunch in this business! In all circumstances however, a prudent frame of mind is to let the data speak for itself!

- The points made so far point to a multi-disciplinary strategy as the only viable game in town. On top of that, we don’t expect single agencies to be able, on their own, to field these multi-disciplinary teams. Agile, federated approaches are in order. The reader who approaches this topic with that frame of reference will resonate with the report.

- Finally, and most importantly, rare events, by their very nature, are almost impossible to predict. At the core of this white paper is the assumption that we can do a better job of anticipating them, however, if we learn more about how our brain works and why it gets us into trouble. Although we may not be able to predict rare events, we can reduce the chances of being surprised if we employ measures to help guard against inevitable cognitive pitfalls.

The reader should also be forewarned that the approaches discussed in this paper are by no means a “be all…end all”. All contributors would agree that the approaches presented here are to varying degrees “fragile” and will need further nurturing to reach their full potential.

The reader should not be put off by the size of the report. The articles are intentionally kept short and written to stand alone. You are encouraged to read the whole report. However even a selective reading would offer its own rewards. With that in mind, all the contributors have done their best to make their articles easily readable.

We open the white paper with an article by Gary Ackerman (1.1). It serves as a scene setter. Gary makes the point about differences between strategic and point predictions; points out general forecasting complexities; and, as the reader will be reminded throughout the rest of the report, notes the limitations of purely inductive approaches (i.e., the happy-go-lucky turkey all year until the unexpected on Turkey Day!). These inductive approaches work best when coupled with other complementary approaches. He makes the point that forecasting must rely on information sharing, extensive collaboration, and automated tools, a point that will be made by other contributors.

From this scene setter, we’re ready to assess how various disciplines have been treating this genre of problem set. We start with an anthropological perspective by Larry Kuznar (2.1). The realization that a broader understanding of culture is necessary to meet modern national security challenges is a “no-brainer” and has naturally refocused attention toward anthropology. Historically, the human ability to use past experience and creative imagination to model possible futures provides humans with a powerful and unique ability for prognostication. The article makes the point that when creative imagination departs from empirically verifiable social processes, and when beliefs in predictions become dogmatic and not adjustable in light of new data, the benefits of prediction are lost and whole societies can be destroyed due to a collectively held fantasy.

From an anthropological perspective, we move to philosophical and epistemological considerations (i.e., what we know and how we know it) by Ken Long (2.2). The paper summarizes recent results in the epistemology of the social sciences which indicate that the employment of case-by-case studies in combination with theories of limited scope involving a select intersection of variables can produce results that are far more useful than more traditional research.

In the following paper (2.3), Carl Hunt and Kathleen Kiernan provide a law enforcement (LE) perspective to the challenges criminal investigators and police officers on the street face in anticipating rare events. Similarities and differences with intelligence analyses are highlighted. The authors discuss the three “I’s” in LE (i.e., Information, Interrogation and Instrumentation) in the context of our problem set and highlight the role of fusion of intuition, probability and modeling as enhancements to the three “I’s”.

We next move to the all-important evolutionary theory and the insights it can provide. In his contribution (2.4), Lee Cronk makes the case that although it deals mainly with the common and everyday, evolutionary theory may also help us forecast rare events, including rare human behaviors. Understanding evolutionary theory’s contributions to this problem requires an understanding of the theory itself and of how it is applied to human behavior and psychology. He describes concepts such as costly signaling theory; i.e., terrorist organizations actively seeking recognition for their acts, giving them an additional reason to send hard-to-fake signals regarding their collective willingness to do harm to others on behalf of their causes. An appreciation of the signaling value of terrorist acts may increase our ability to forecast them. He also describes the concept of mismatch theory; i.e., the idea that much of the malaise in modern society may be due to the mismatch between it and the kinds of societies in which we grew up. And finally he describes “the smoke detector principle” (i.e., early warning system) from the perspective of evolutionary theory.

We conclude this segment of the white paper with a military perspective provided by Maj Joe Rupp (2.5). He approaches rare events from the perspective of “crisis” level events. As such, understanding the nature of crises facilitates a better understanding of how rare events may be anticipated. He goes on to dissect the anatomy of crises by tapping a body of literature on the topic. Rather than viewing a crisis as a “situation”, he uses the phrase “sequence of interactions”. This implies that there are events leading up to a “rare event” that would serve as indicators of that event. Identification and understanding of these precipitants would serve to help one anticipate rare events. From a military perspective, anticipation of a truly unforeseen event may lie solely in maintaining an adaptive system capable of relying on current plans and available resources as a point of departure from which the organization can adjust to meet the challenge of the unforeseen event. In contrast to the Cold War planning construct, he introduces the concept of Adaptive Planning and Execution (APEX) which, through technology, uses automatic “flags” networked to near-real-time sources of information to alert leaders and planners to changes in critical conditions and planning assumptions that warrant a reevaluation of a plan. All this calls for “agile and adaptive leaders able to conduct simultaneous, distributed, and continuous operations”.

From here we move on to part 3 of this report to examine fundamental limitations and common pitfalls in anticipating/forecasting rare events. The opening article by Sarah Beebe and Randy Pherson (3.1) discusses the cognitive processes that make it so difficult to anticipate rare events; i.e., the things that help us efficiently recognize patterns and quickly perform routine tasks can also lead to inflexible mindsets, distorted perceptions, and flawed memory.

The next article (3.2) by Sue Numrich tackles the set of interrelated empirical challenges we face. The human domain is currently broken down and studied in a variety of disparate disciplines including psychology, political science, sociology, economics, anthropology, history and many others, all of which, taken as a whole, are needed to understand and explain the human terrain. These factors require an ability to share information in new ways. Moreover, the abundance of information sources in our internet-enabled world creates a problem of extracting and managing the information events and requires a balance between the amount of information acquired and the capability to process it into usable data.

No discussion of rare events is complete these days without due consideration to Black Swans! That challenge is taken on by Carl Hunt (3.3). Carl differentiates true Black Swans (TBS, the truly unpredictable) from anticipatory black swans (ABS, which may be difficult but not impossible to anticipate); the latter are more in line with the topic of this white paper. He states that it’s the questions as much as the answers that will lead to success in anticipating what we call Black Swans, regardless of category. Often these questions are posed in the form of hypotheses or assumptions that bear on the conclusions decision-makers take. We must also be willing to let go of prejudice, bias and pre-conceived notions. Carl reinforces a strong theme in this white paper, namely that “Intuition and the Scientific Method” should synergize to help in tackling anticipatory black swans.

Having set the cognitive limitations, we next move to discuss remedies. The reader who has stuck with us so far will find the subsequent papers offering some guarded hope. For methodological purposes, we divide the solution space into two categories: front-end and back-end. These are discussed in turn by Fenstermacher/Grauer and Popp/Canna.

Laurie Fenstermacher and Maj Nic Grauer (4.1) start off by depicting the daunting task ahead not just as a “needle in a haystack” but as a “particular piece of straw in a haystack” (the perennial discouragingly low signal-to-noise problem!). They define “Front end” as the group of capabilities to retrieve or INGEST, label or CHARACTERIZE, apply specific labels, extract information, parameterize or CODE and VISUALIZE data/information. Besides the low “signal-to-noise” ratio challenge, there are other challenges such as languages other than English. In addition, the information is more often than not “unstructured” and multi-dimensional. They state firmly that there are no “silver bullet” solutions…but (and dear reader please don’t despair!) there are genuinely a number of solutions. These can do one or more of the above functions and, in doing so, provide a force multiplier for scarce human resources by enabling them to use their time for thinking/analysis and not data/information processing. They go on to describe promising capabilities in all front-end categories. Their parting claim is that properly employed as part of an “optimized mixed initiative” (computer/human) system, the solutions can provide a key force multiplier in providing early warning of rare events well to the “left of boom”.

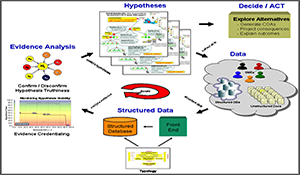

The following article (4.2) by Bob Popp and Sarah Canna provides an overview of the back-end solution space. Stated simply, the challenge here is how to make sense of and connect the relatively few and sparse dots embedded within massive amounts of information. They state that the IT tools associated with the back-end are the analytic components that attempt to meet this challenge and provide analysts with the ability for collaboration, analysis and decision support, pattern analysis, and anticipatory modeling. They go on to make the case that IT tools can help invert a trend – sometimes referred to as the “Analyst Bathtub Curve” – thus allowing analysts to spend less time on research and production and more time on analysis. They illustrate how several such tools were integrated in a sample analytic cycle.

Having introduced the broad concepts of front-end and back-end, we go on to discuss various promising remedies. The first article in this category (4.3) by Bob Popp and Stacy Pfautz discusses the landscape of Quantitative/Computational Social Science (Q/CSS) technologies that provide promising new methods, models, and tools to help decision-makers anticipate rare events. They describe the three main Q/CSS modeling categories; namely quantitative, computational, and qualitative techniques.

Kuznar et al. (4.4) follow up with a discussion of statistical modeling approaches. The challenge here is data sparseness. They emphasize a common theme throughout this white paper; namely that there is no guarantee that conditions and causal relations of the past will extend into the future. Furthermore, in the case of rare events, there is often precious little information upon which to base predictions. They do discuss state-of-the-art methods to extract and structure data, impute missing values, and produce statistically verifiable forecasting of rare WMD terrorism activities. A key product from this effort is the identification of key factors (i.e., fingerprints, indicators, antecedents, or precursors) that are statistically related to increases in the probability of rare event occurrence.

We shift gears next with an article by Tom Rieger (4.5) on models based on the Gallup World Poll data. These models provide the ability to spot areas that are currently unstable or at risk of becoming unstable, including pockets of radicalism. They can provide very useful avenues for narrowing down search spaces for origin and destination of rare event activities.

Having presented empirically based approaches to forecasting and the evaluation of the environmental factors from which they arise, we turn our attention next to the use of applied behavior-based methodology to support anticipation/forecasting. The author of this article (4.6) is Gary Jackson. Key to his argument is the assumption that if antecedents and consequences associated with repeated occurrences of behavior can be identified, then the occurrence of that behavior in the future may be anticipated when the same or highly similar constellations of antecedents and likely consequences are present. In the absence of adequate history, Jackson has developed a hybrid approach of behavioral data combined with subject matter expert (SME) generated scenarios. A neural network pattern classification engine is trained and used to generate hypotheses given real world injects.

In the following article (4.7), Sandy Thompson, Paul Whitney, Katherine Wolf, and Alan Brothers discuss a data integration framework for quantitatively assessing relative likelihood, consequence and risk for rare event scenarios. Current methodologies are limited because they are qualitative in nature, based on expert assessments and elicited opinions. The authors advance structural models to organize and clarify rare event scenario assessments, including qualitative as well as quantitative factors. Benefits to intelligence analysts would include increased transparency into the analysis process, facilitation of collaborative and other parallel work activities, and mitigation of analyst bias and anchoring tendencies. They discuss concepts based on Bayesian statistics and the three primary factors that drive the analysis: the motivation and intent of the group directing the event, the capabilities of the group behind the attack, and target characteristics. They conclude their article by pointing out that the methodologies are intended to address and mitigate bias, reduce anchoring, and incorporate uncertainty assessments.
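To make the idea of quantitatively assessing relative likelihood, consequence and risk concrete, the following is a minimal sketch rather than the authors' framework. It assumes a discrete set of hypothetical scenarios, applies Bayes' rule to update their relative likelihood given one piece of evidence, and treats relative risk as likelihood times consequence. All scenario names and numbers are invented for illustration.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' rule over a discrete set of scenarios.

    priors[s]      -- prior probability of scenario s (assumed values)
    likelihoods[s] -- probability of the observed evidence if s is true
    """
    unnormalized = {s: priors[s] * likelihoods[s] for s in priors}
    total = sum(unnormalized.values())
    return {s: value / total for s, value in unnormalized.items()}


def relative_risk(probabilities, consequences):
    """Relative risk per scenario, taken here as probability x consequence."""
    return {s: probabilities[s] * consequences[s] for s in probabilities}


# Hypothetical inputs: none of these figures come from the white paper.
priors = {"scenario_a": 0.5, "scenario_b": 0.3, "scenario_c": 0.2}
likelihoods = {"scenario_a": 0.1, "scenario_b": 0.4, "scenario_c": 0.4}
consequences = {"scenario_a": 10.0, "scenario_b": 50.0, "scenario_c": 100.0}

post = posterior(priors, likelihoods)
risk = relative_risk(post, consequences)
ranking = sorted(risk, key=risk.get, reverse=True)

for name in ranking:
    print(f"{name}: likelihood={post[name]:.2f} risk={risk[name]:.1f}")
```

Keeping the priors, evidence likelihoods, and consequence scores as explicit inputs is one way to support the transparency the article calls for, since each number can be challenged and revised by a different analyst.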

In the following article (4.8), Allison Astorino-Courtois and David Vona make the case that subjective decision analysis – viewing an adversary’s choices, costs and benefits from his perspective – can provide invaluable assistance to the rare event analyst. Here “subjective” refers to a decision model designed according to how the decision maker views the world rather than the beliefs of the analyst. Such an analysis helps the analyst gain insight into three critical areas; namely motivation, intent, and indicators. The authors argue that such an approach is valuable because it forces the analyst to look at the issue of rare and dangerous events from the perspective of those deciding to engage in them.

In the next article (4.9), Elisa Bienenstock and Pam Toman provide an overview of Social Network Analysis (SNA) and propose a manner in which potential rare events may be recognized a priori through monitoring social networks of interest. In line with a common theme throughout this white paper, they emphatically state that SNA alone is not a sufficient perspective from which to anticipate the planning or imminence of a rare event. To be useful, SNA must be used as a component of a more comprehensive strategy requiring cultural and domain knowledge. They go on to state that SNA is both an approach to understanding social structure and a set of methods for analysis to define and discover those activities and associations that are indicators of these rare, high impact events. In particular, they focus their discussions on two insights from the field of social network analysis: 1) the innovation necessary to conceive of these rare events originating at the periphery of the terrorist network, and 2) the organization of an event and how it generates activity in new regions of the network.

Ritu Sharma, Donna Mayo, and CAPT Brett Pierson introduce in the next article (4.10) concepts from System Dynamics (SD) and systems thinking to explore some of the ways in which this technique can contribute to the understanding of “rare events”. Their focus is on identifying the key dynamics that drive behavior over time and the explicit consideration of feedback loops. Starting with the by now familiar premise that building a model that can accurately predict the future is impossible, they go on to enumerate the benefits attributed to a System Dynamics model to help prepare for, and hopefully mitigate the adverse effects of, rare events such as understanding which rare events generate the most harmful impacts, highlighting the factors and causal logic that would drive a rare event, and lastly identifying the highest leverage areas for focus and mitigation. They conclude by stating that System Dynamics can integrate valuable experience, research, and insight into a coherent framework to help refine and accelerate learning, facilitate discussion, and make the insight actionable by identifying key leverage points and creating a platform to test impacts of various strategies.
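The notions of behavior over time and feedback loops can be illustrated with a toy model. The sketch below is not drawn from the article: it assumes a single hypothetical stock driven by one reinforcing loop (growth proportional to the stock) and one balancing loop (a drain that grows as the stock approaches an assumed capacity), integrated with simple Euler steps. The parameter names and values are illustrative only.

```python
def simulate_stock(initial, growth_rate, capacity, dt, steps):
    """Euler-integrate one stock shaped by two feedback loops."""
    stock = initial
    trajectory = [stock]
    for _ in range(steps):
        inflow = growth_rate * stock                      # reinforcing loop
        outflow = growth_rate * stock * stock / capacity  # balancing loop
        stock += dt * (inflow - outflow)
        trajectory.append(stock)
    return trajectory


# Hypothetical parameters chosen only to show the characteristic S-shaped curve.
trajectory = simulate_stock(
    initial=1.0, growth_rate=0.5, capacity=100.0, dt=0.1, steps=400
)

for step in (0, 50, 100, 200, 400):
    print(f"t={step * 0.1:5.1f}  stock={trajectory[step]:7.2f}")
```

Even this small model shows why such simulations are used for exploration rather than prediction: changing the strength of either loop changes when the curve bends, which is the kind of leverage point the article describes.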

The next article (4.11) by Carl Hunt and David Schum advances approaches that harness the science of probabilistic reasoning augmented by complex system theory in support of testing hypotheses for unforeseen events. Key assumptions here go to the heart of the challenge of forecasting rare events: nonlinearity of input and output; the ambiguity of cause and effect; the whole is not quantitatively equal to its parts; and that results may not be assumed to be repeatable. Adaptation and co-evolution are important features in this approach. The article stresses the role of curiosity and discovery in the process of abduction as a formal method of reasoning. We often deal with evidence that is typically incomplete, often inconclusive, usually dissonant, ambiguous, and imperfectly credible. When this occurs, we must apply some sort of probabilistic inference method to assess the effect a particular piece of evidence will have on our decision-making, even when there are no historical event distributions to assess – the authors discuss how this might be accomplished.

Eric Bonabeau in his contribution (4.12) re-states a now-common theme; namely our inability to predict low-frequency, high-impact events in human and manmade systems is due to two fundamental cognitive biases that affect human decision making. These are availability and linearity. Availability heuristics guide us toward choices that are easily available from a cognitive perspective: if it’s easy to remember, it must make sense. Linearity heuristics make us seek simple cause-effect relationships in everything. He makes the case that to anticipate rare events we need augmented paranoia. His corrective strategies include tapping the collective intelligence of people in a group and tapping the creative power of evolution.

At this juncture in the white paper we change course again and discuss the key role of gaming in anticipating rare events. Although games cannot “predict” the future, they can provide enhanced understanding of the key underlying factors and interdependencies that are likely to drive future outcomes. There are four contributions in this section.

The first contribution (4.13), by Fred Ambrose and Beth Ahern, makes the case for moving beyond traditional Red Teams. They state that the conventional use of Red Teams is not to serve as an anticipatory tool to assess unexpected TTPs. Rather they are configured to test concepts, hypotheses, or tactics and operational plans in a controlled manner. They recommend instead modifying traditional red teams by acknowledging the importance of the professional culture of individuals who make up these teams augmented by consideration given to the knowledge, experience, skills, access, links, and training (KESALT) of people who may be operating against the blue teams. This can help alleviate failures on the part of Blue Teams to fully understand the role of “professional cultural norms” of thought, language, and behavior. They introduce the concept of Social Prosthetic Systems (SPS) and the value of integrating it into Red Team formation. SPS can help analysts to understand the roles that culture, language, intellect, and problem solving play for our adversaries as they form planning or operational groups in a manner similar to “prosthetic extensions”. They point out that a red team based on the KESALT of known or suspected groups and their SPSs can provide much greater insight into the most probable courses of action that such a group might devise, along with the greatest potential to carry out these actions successfully. In addition, such red teams can provide invaluable insights into and bases for new collection opportunities, as well as definable indications and warning. In this sense it can help create the basis for obtaining structured technical support for a blue team or joint task force. Such red team efforts can lead to unique training opportunities for joint task force officers and to newly identified metrics and observables that can serve as unique indications and warnings, and can even anticipate both the state of planning and execution and the ultimate nature and target(s) of military or terrorist actions.

In the following article (4.14), Dan Flynn opens his contribution with a theme which is common by now: that standard analytic techniques relying on past observations to make linear projections about the future are often inadequate to fully anticipate emerging interdependencies and factors driving key actors toward certain outcomes. He posits that strategic analytic gaming is a methodology that can be employed to reveal new insights when complexity and uncertainty are the dominant features of an issue. Through strategic analytic games, events that are “rare” but imaginable, such as a WMD terrorist attack, can be explored and their implications assessed. In addition, strategic gaming can reveal emerging issues and relationships that were previously unanticipated but could result in future challenges and surprise if not addressed.

In his contribution to the role of gaming (4.15), Jeff Cares describes an innovative approach to planning for uncertain futures, called “Co-Evolutionary Gaming.” His focus is on complex environments in which trajectories to future states cannot all be known in advance because of strong dependencies among states. He discusses problematic characteristics of existing gaming methods. He then goes on to highlight characteristics of a method for proposed scenario-based planning that more creatively explores the potential decision space. He introduces the concept of Co-evolutionary Gaming as a method of planning for uncertain futures with which players can quickly and inexpensively explore an extremely vast landscape of possibilities from many perspectives.

We end this series on gaming with a contribution by Bud Hay (4.16) who approaches gaming from an operational perspective. Instead of focusing on the worst possible case, he advocates addressing more fundamental issues, such as: What is the adversary trying to achieve? What are his overall interests and objectives? What assets are needed to accomplish those objectives? What are the rules of engagement? What alternative paths are possible to achieve success? He advocates an “environment” first and “scenario” second approach to gaming. He goes on to make the case that operational level gaming can be a valuable technique for planners and operators alike to anticipate surprise, evaluate various courses of action, and sharpen understanding of critical factors regarding potential crisis situations. By examining the congruence of capabilities and intent, it helps decision makers better anticipate rare events. All these can provide a contextual framework from which to postulate likely, and not so likely, terrorist attacks and to evaluate readiness and preparedness against them.

We shift gears again with a series of four articles that will round out the discussion of analytic opportunities in our remedy section. In the first contribution (4.17), Sue Numrich tackles the all-important issue of knowledge extraction. Sue’s point of departure is that rare events of greatest concern arise in foreign populations among people whose customs and patterns of thought are not well understood, largely through lack of familiarity. The expert in such cases is someone who has spent considerable time living with and studying the population in question. The problem with relying on expert opinion is that it is only opinion and all observers, including subject matter experts, have perspectives or biases. She tackles the issue of how to elicit expert opinion in a manner that adds understanding while avoiding the selection of the wrong experts or the misinterpretation of their statements. She differentiates between SME elicitation and polling and addresses the all-important problem of finding the right experts. She goes on to tackle the issues of how best to structure the interviews and how to understand the biases (ours and theirs!).

In the second in this series of articles (4.18), Frank Connors and Brad Clark discuss the important role of open source methods. They state the objective of open source analysis is to provide insights into local and regional events and situations. In this sense, open source analysis provides a tipping and cueing function to support all-source analysis. They go on to describe Project ARGUS, an open source capability to detect biological events on a global scale. A total of 50 ARGUS personnel are fluent in over 30 foreign languages. The scale of open source collection ranges from 250,000 to 1,000,000 articles each day with archiving of relevant articles. Machine and human translation is utilized in conjunction with Bayesian networks developed in each foreign language performing key word searches for event detection. They conclude by stressing that combining the open source data with other classified data sets is key to the overall evaluation.

The next contribution (4.19) by Randy Pherson, Alan Schwartz, and Elizabeth Manak focuses on the role of Analysis of Competing Hypotheses (ACH) and other structured analytical techniques. The authors begin with familiar themes for the reader who has stuck with us so far; namely, engrained mindsets are a major contributor to analytic failures and how difficult it is to overcome the tendency to reach premature closure. The authors go on to describe remedies such as Key Assumption Check, Analysis of Competing Hypotheses (ACH), Quadrant Crunching, and The Pre-Mortem Assessment amongst others. Key to their argument is that the answer is not to try to predict the future. Instead, the analyst’s task is to anticipate multiple futures (i.e., the future in plural) and identify observable indicators that can be used to track the future as it unfolds. Armed with such indicators, the analyst can warn policy makers and decision makers of possible futures and alert them in advance, based on the evidence.
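As a rough illustration of how an Analysis of Competing Hypotheses matrix can be tallied, the sketch below rates each item of evidence against each hypothesis as consistent ("C"), inconsistent ("I"), or neutral ("N"), and then ranks hypotheses by how little evidence contradicts them. The evidence items, hypotheses, and ratings are entirely hypothetical, and simple counting of inconsistencies is an assumed scoring rule, not necessarily the one the authors use.

```python
# Hypothetical ACH matrix: rows are evidence items, columns are hypotheses.
# Ratings: "C" = consistent, "I" = inconsistent, "N" = neutral.
matrix = {
    "evidence_1": {"H1": "C", "H2": "I", "H3": "C"},
    "evidence_2": {"H1": "I", "H2": "I", "H3": "C"},
    "evidence_3": {"H1": "N", "H2": "I", "H3": "C"},
    "evidence_4": {"H1": "I", "H2": "C", "H3": "I"},
}


def inconsistency_scores(ach_matrix):
    """Count, for each hypothesis, the evidence items rated inconsistent."""
    scores = {}
    for ratings in ach_matrix.values():
        for hypothesis, rating in ratings.items():
            scores[hypothesis] = scores.get(hypothesis, 0) + (rating == "I")
    return scores


scores = inconsistency_scores(matrix)

# The hypothesis with the fewest inconsistencies is the hardest to reject.
ranked = sorted(scores, key=scores.get)

for hypothesis in ranked:
    print(f"{hypothesis}: {scores[hypothesis]} inconsistent item(s)")
```

Ranking by disconfirming evidence rather than by supporting evidence is meant to counter the premature closure described above, because an analyst cannot keep a favored hypothesis alive simply by accumulating items that happen to fit it.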

We complete the series of articles on analytical techniques with a contribution by Renee Agress, Alan Christiansen, Brian Nichols, John Patterson, and David Porter (4.20). Their theme is that through the analyses of actors, resources, and processes, and the application of a multi-method approach, more opportunities are uncovered to anticipate a rare event and implement interventions that lead to more desirable outcomes. They stress the need for understanding the motivation of actors (Intent), their required resources (Capability), and the multitude of process steps that the actors have to implement (Access). While each of these items may or may not be alarming in isolation, the capability to identify a nexus of individuals and activities spanning the three areas can facilitate anticipating a rare event. However, anticipation becomes more difficult as the time of execution approaches. During and prior to the execution phase, the members of the group become OPSEC conscious and tend not to leave a data signature. In line with their multi-method approach, they highlight the need for diverse fields such as econometrics, game theory, and social network analysis, amongst others.

At the beginning of this Executive Summary, it was stated that the challenges caused by rare events point to the need for a multi-disciplinary and multi-agency response built on the notions of transparency, bias mitigation and collaboration. The challenge of course is how to instantiate such concepts. This is taken up by Carl Hunt and Terry Pierce (4.21). They propose the integration of a collaboration concept built on a biologically inspired innovation known as the Flexible Distributed Control (FDC) Mission Fabric which can provide a potential substrate for organizational interaction. These interactions will occur through a setting known as the Nexus Federated Collaboration Environment (NFCE) that is described in detail in an SMA Report of the same name. The NFCE concept is adaptively structured, while empowering collaborative consensus-building. It is built on a multi-layer/multi-disciplinary decision-making platform such as the proposed FDC, while maintaining an orientation towards evolutionary design. The NFCE seeks to highlight and mitigate biases through visibility of objective processes, while accommodating incentives for resolving competing objectives. The authors point out that while these are lofty objectives, the means to integrate and synergize the attributes of the NFCE are coming together in bits and pieces throughout government and industry. The FDC is a proposed concept for creating an instantaneous means to distribute and modulate control of the pervasive flow of command and control information in the digital network. It offers the ability to focus and align social networks and organizations. The FDC weaves the virtues of social networking into a group-action mission fabric that is based on collaboration and self-organization rather than on layered hierarchy and centralized control. The article goes on to discuss the engine for executing FDC; that is, the mission fabric, a new situational awareness architecture enabling collaboration and decision making in a distributed environment. The mission fabric is a network-linked platform, which leverages social networking and immersive decision making. From initial assessments, it appears that the NFCE and FDC may synergize effectively to empower interagency planning and operations.

Jennifer O’Connor brings this white paper to a close with an epilogue (4.22). She provides insightful comments on the whole enterprise of forecasting rare events and the role of identifiable patterns of precursors. She warns against confusing historical antecedents with generative causes. She provides a general framework and set of avenues by which one can think about rare-events-in-the-making in contemporary and futuristic terms. She stresses the need for an almost clinical detachment from our own basic information gathering and processing. She concludes by stating that the strategy of inoculating against “rare events” allows us to move off the well-worn path of generalizations based in the past and alarmist projections.

Two appendices to this white paper provide information relevant to the topic at hand. Appendix A describes a Table Top Exercise (TTX) planned and executed by DNI with support from SAIC and MITRE. It was designed to assess analytic methodologies by simulating a terrorist plot to carry out a biological attack. The Appendix goes on to summarize the main insights and implications produced by the exercise. In Appendix B, we reference an article by Harold Ford titled “The Primary Purpose of National Estimating” which addresses the Japanese attack on Pearl Harbor. It is very pertinent to the topic at hand and is alluded to by various contributors to this white paper.

Lastly, Appendix C provides definitions of acronyms used.

Contributing Authors

Gary Ackerman (U MD START), Renee Agress (Detica), Beth Ahern (MITRE), Fred Ambrose (USG), Victor Asal (SUNY, Albany), Elisa Bienenstock (NSI), Alan Brothers (PNNL), Hriar Cabayan (RRTO), Bradley Clark (TAS), Allison Astorino-Courtois (NSI), Sarah Beebe (PAL), Eric Bonabeau (Icosystem), Sarah Canna (NSI), Jeffrey Cares (Alidade), Alan Christianson (Detica), Frank Connors (DTRA), Lee Cronk (Rutgers), Laurie Fenstermacher (AFRL), Daniel Flynn (ODNI), Nic Grauer (AFRL), Bud Hay (ret), Carl Hunt (DTI), Gary Jackson (SAIC), Kathleen Kiernan (RRTO), Lawrence Kuznar (NSI), Kenneth Long (IPFW), Elizabeth Manak (PAL), Donna Mayo (PA Consulting), Brian Nichols (Detica), Sue Numrich (IDA), Jennifer O’Connor (DHS), John Patterson (Detica), Krishna Pattipati (U Conn), Stacy Lovell Pfautz (NSI), Randolph Pherson (PAL), Terry Pierce (DHS/USAFA), CAPT Brett Pierson (Joint Staff J8), Bob Popp (NSI), David C. Porter (Detica), Karl Rethemeyer (SUNY, Albany), Tom Rieger (Gallup Consulting), Maj Joseph Rupp (Joint Staff J3), David Schum (GMU), Alan Schwartz (PAL), Ritu Sharma (PA Consulting), Steven Shellman (William and Mary, SAE), Sandy Thompson (PNNL), Pam Toman (NSI), David Vona (NSI), Paul Whitney (PNNL), and Katherine Wolf (PNNL)
